The Microsoft leak, which stemmed from AI researchers sharing open-source training data on GitHub, has been mitigated.

Microsoft has patched a vulnerability that exposed 38TB of private data from its AI research division. White hat hackers from cloud security company Wiz discovered a shareable link based on Azure Shared Access Signature (SAS) tokens on June 22, 2023. The hackers reported it to the Microsoft Security Response Center, which invalidated the SAS token by June 24 and, on July 7, replaced the token on the GitHub page where it was originally posted.


SAS tokens, an Azure file-sharing feature, enabled this vulnerability

The hackers first discovered the vulnerability while searching the internet for misconfigured storage containers, which are a known backdoor into cloud-hosted data. They found robust-models-transfer, a repository of open-source code and AI models for image recognition used by Microsoft’s AI research division.

The vulnerability originated from a Shared Access Signature token for an internal storage account. While working on open-source AI learning models, a Microsoft employee shared, in a public GitHub repository, a URL for a Blob store (a type of object storage in Azure) containing an AI dataset. The Wiz team then used the misconfigured URL to gain access to the entire storage account.
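Because a SAS token is just a set of query parameters appended to a storage URL, much of what it claims to grant can be read straight off the link. The sketch below is a hypothetical helper, not a Microsoft or Wiz tool: it assumes the documented Azure parameter names (`ss`/`srt` for an account-level SAS, `sr` for a blob- or container-level SAS, `sp` for permissions, `se` for expiry), maps only a subset of permission letters, and does not verify the signature.

```python
from urllib.parse import urlparse, parse_qs

# Subset of Azure Storage SAS permission letters (the "sp" parameter).
# The real list is longer; unknown letters are passed through unchanged.
PERMISSION_NAMES = {
    "r": "read",
    "a": "add",
    "c": "create",
    "w": "write",
    "d": "delete",
    "l": "list",
}
# Letters that let the holder change data, not just read it.
WRITE_LIKE = {"a", "c", "w", "d"}


def describe_sas(url):
    """Summarize what a SAS URL's query string claims to grant.

    Hypothetical illustration only: it reads the query parameters and
    makes no attempt to validate the signature ("sig").
    """
    params = {k: v[0] for k, v in parse_qs(urlparse(url).query).items()}
    perms = params.get("sp", "")
    # Account SAS tokens carry "ss" (services) and "srt" (resource types);
    # service SAS tokens carry "sr" ("b" = single blob, "c" = container).
    if "ss" in params or "srt" in params:
        scope = "account"
    elif "sr" in params:
        scope = {"b": "blob", "c": "container"}.get(params["sr"], params["sr"])
    else:
        scope = "unknown"
    return {
        "scope": scope,
        "permissions": [PERMISSION_NAMES.get(p, p) for p in perms],
        "can_modify": any(p in WRITE_LIKE for p in perms),
        "expiry": params.get("se"),
    }


if __name__ == "__main__":
    # Made-up account name and values, for illustration.
    example = (
        "https://example.blob.core.windows.net/?sv=2021-08-06&ss=b&srt=sco"
        "&sp=rwdlac&se=2050-01-01T00:00:00Z&sig=REDACTED"
    )
    print(describe_sas(example))
```

A link scoped to the whole account with write and delete rights, like the example, is the kind of over-broad sharing this incident illustrates; a read-only token scoped to a single blob or container would expose far less.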

When the Wiz hackers followed the link, they were able to access a repository that contained disk backups of two former employees’ workstation profiles and internal Microsoft Teams messages. The repository held 38TB of private data, including secrets, private keys and passwords, alongside the open-source AI training data.

SAS tokens can be issued with expiry dates far in the future and are difficult to track and revoke, so they aren’t typically recommended for sharing important data externally. A September 7 Microsoft security blog pointed out that “Attackers may create a high-privileged SAS token with long expiry to preserve valid credentials for a long period.”
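One practical guard against long-lived tokens is to check the expiry (`se`) parameter of any SAS link before it is shared. The sketch below is a hypothetical check, not an Azure feature: the seven-day `MAX_LIFETIME` is an assumed policy value, and it handles only plain ISO 8601 timestamps (with or without a trailing `Z`) and date-only values.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import urlparse, parse_qs

# Hypothetical policy limit; choose whatever your organization allows.
MAX_LIFETIME = timedelta(days=7)


def sas_expiry(url):
    """Return the token's expiry as an aware UTC datetime, or None if absent."""
    values = parse_qs(urlparse(url).query).get("se")
    if not values:
        return None
    # Older Python versions' fromisoformat() rejects a trailing "Z".
    raw = values[0].replace("Z", "+00:00")
    expiry = datetime.fromisoformat(raw)
    if expiry.tzinfo is None:
        # Date-only values such as "2023-07-01" parse as naive; treat as UTC.
        expiry = expiry.replace(tzinfo=timezone.utc)
    return expiry


def is_long_lived(url, now=None, max_lifetime=MAX_LIFETIME):
    """Flag tokens that remain valid longer than the allowed lifetime."""
    expiry = sas_expiry(url)
    if expiry is None:
        # No expiry in the URL (e.g. it may rely on a stored access
        # policy), so flag it for manual review.
        return True
    if now is None:
        now = datetime.now(timezone.utc)
    return expiry - now > max_lifetime
```

A check like this could run in a pre-commit hook or repository scanner, so that a link valid for decades is caught before it reaches a public GitHub page.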

Microsoft noted that no customer data was included in the exposed information, and that the AI data set posed no risk of other Microsoft services being breached.

What companies can learn from the Microsoft data leak

This case isn’t specific to Microsoft’s AI training work; any very large open-source data set could conceivably be shared this way. However, Wiz pointed out in its blog post, “Researchers collect and share massive amounts of external and internal data to construct the required training information for their AI models. This poses inherent security risks tied to high-scale data sharing.”

Wiz suggested that organizations looking to avoid similar incidents should caution employees against oversharing data. In this case, the Microsoft researchers could have moved the public AI data set to a dedicated storage account.

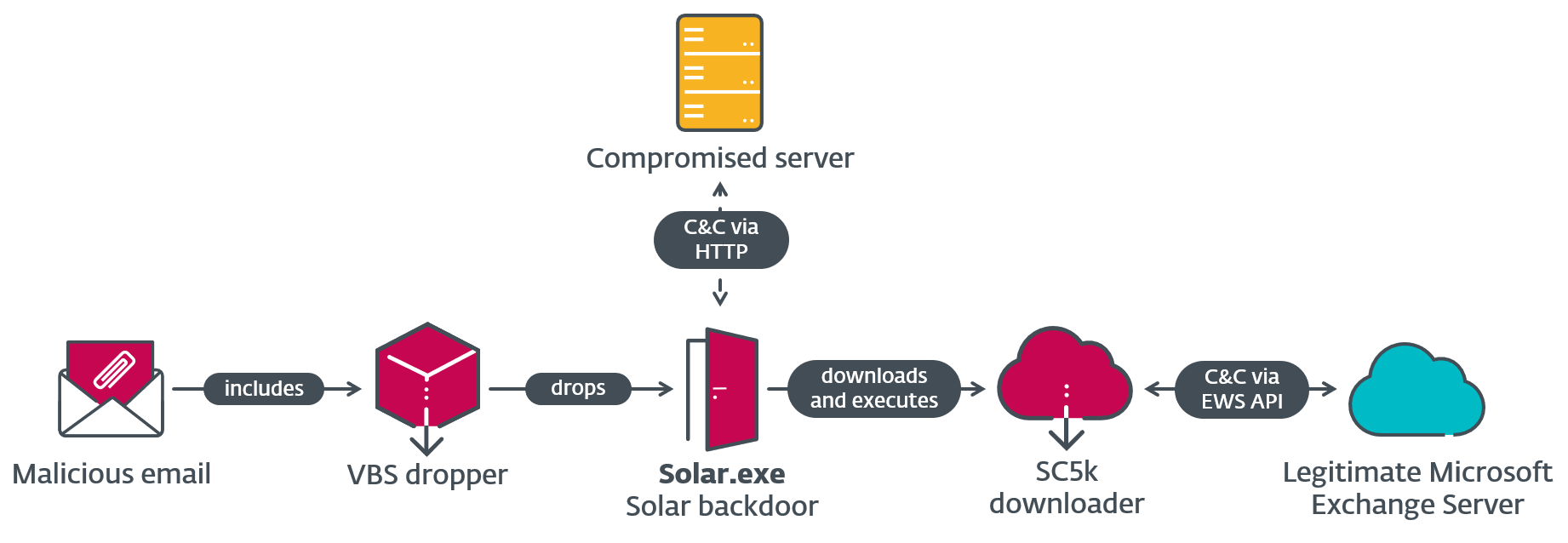

Organizations should also be alert to supply chain attacks, which can occur if attackers inject malicious code into files left open to public access through improper permissions.


“As we see wider adoption of AI models within companies, it’s important to raise awareness of relevant security risks at every step of the AI development process, and make sure the security team works closely with the data science and research teams to ensure proper guardrails are defined,” the Wiz team wrote in their blog post.

TechRepublic has reached out to Microsoft and Wiz for comment.